Quantity takeoff (QTO) is the foundation of construction cost estimation, itself a critical task. For decades, professionals have sought to streamline QTO through technology, aiming for greater speed, consistency, and accuracy.

Yet despite advances in digital tools, the profession is still in flux. A 2023 global study by RICS and Glodon found that only 39% of surveyed organizations use QTO and estimating software integrated with BIM or Common Data Environments. Another 31% rely on standalone tools, and 30% still don’t use specialized software at all.

As someone who’s witnessed the evolution of QTO technologies since the 1980s, from digitizing tablets to today’s AI-powered platforms, I find this gap telling. Now that machine learning and large language models are entering the mix, the big question is: Will AI finally automate the estimation process or just become another tool in the estimator’s kit?

From physical to digital

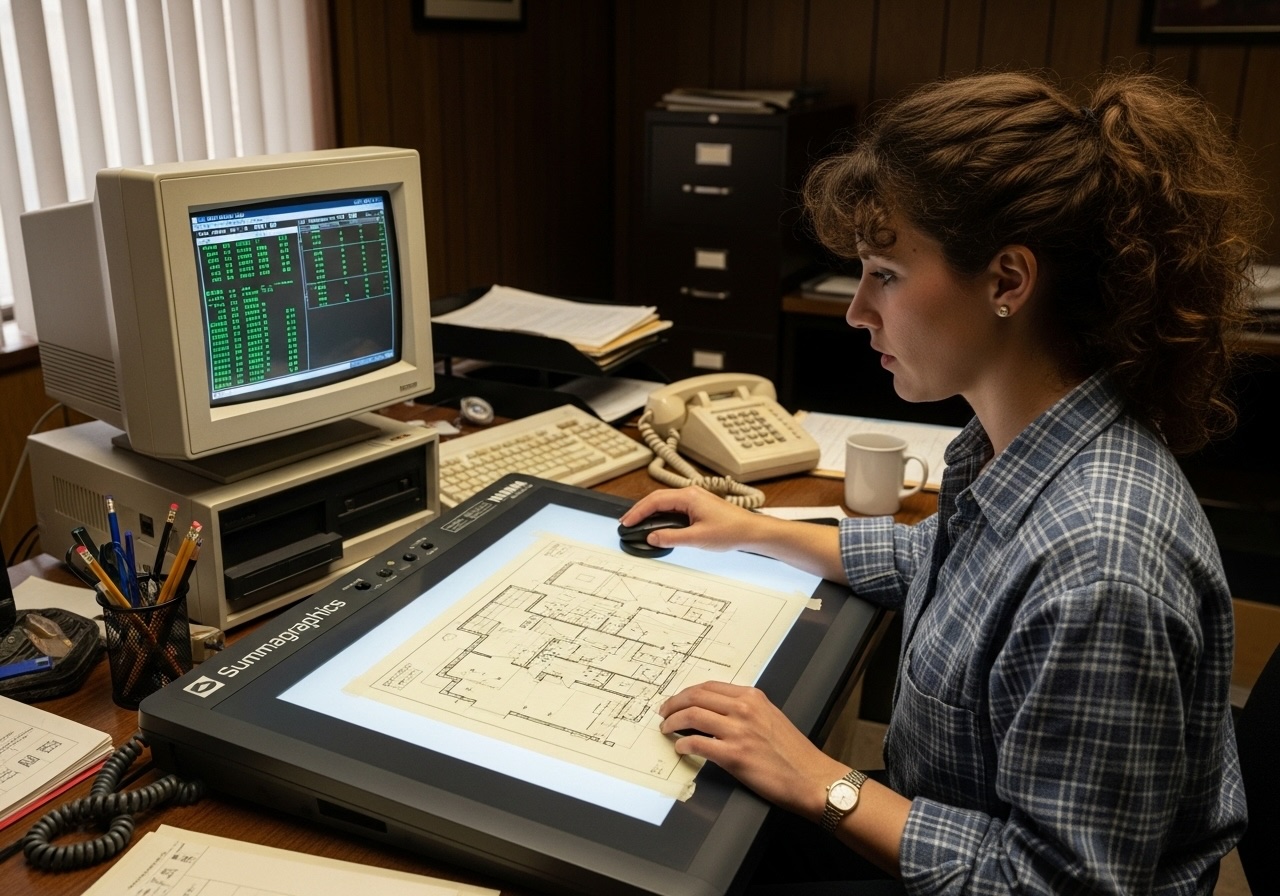

In the 1980s and 1990s, high-tech QTOs used large CalComp or Summagraphics digitizing tablets connected to PCs running specialized software. The takeoff technician would attach a blueprint to the tablet, scale it, and manually trace along walls, floors, windows, and other features using a stylus.

Each element was then classified within the software (for example, as an internal wall, external wall, or floor slab), typically with limited automation. Pricing data would be compiled separately using tools like early Excel macros, WinQS, or Timberline Estimating. These setups were powerful for their time but required significant manual effort.
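The scale-and-trace arithmetic behind such a setup can be sketched in a few lines of Python. This is a minimal illustration, not the code of any product named here: it assumes the stylus points arrive as (x, y) coordinates in drawing millimetres and that the scale is given as a denominator (100 for 1:100). All function names are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # assumed: tablet coordinates in drawing millimetres


def metres_per_mm(scale_denominator: float) -> float:
    """Real-world metres represented by one drawing millimetre (1:100 -> 0.1 m)."""
    return scale_denominator / 1000.0


def traced_length(points: List[Point], scale_denominator: float, closed: bool = False) -> float:
    """Real-world length in metres of a traced polyline, e.g. a run of walls."""
    path = points + points[:1] if closed else points
    drawing_mm = sum(math.dist(a, b) for a, b in zip(path, path[1:]))
    return drawing_mm * metres_per_mm(scale_denominator)


def traced_area(points: List[Point], scale_denominator: float) -> float:
    """Real-world area in square metres of a traced outline (shoelace formula)."""
    pairs = zip(points, points[1:] + points[:1])
    twice_area = sum(x1 * y2 - x2 * y1 for (x1, y1), (x2, y2) in pairs)
    return abs(twice_area) / 2.0 * metres_per_mm(scale_denominator) ** 2


# A room traced as a 50 mm x 40 mm rectangle on a 1:100 plan.
room = [(0.0, 0.0), (50.0, 0.0), (50.0, 40.0), (0.0, 40.0)]
perimeter_m = traced_length(room, 100, closed=True)
floor_area_m2 = traced_area(room, 100)
print(f"perimeter: {perimeter_m:.1f} m, floor area: {floor_area_m2:.1f} m2")
```

The traced rectangle corresponds to a 5 m by 4 m room. A wrong scale setting distorts every length linearly and every area quadratically, which is one reason scaling the drawing was a deliberate step in the workflow.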

CAD QTOs

I started using CAD in the mid-1980s. Initially, CAD was primarily for digital drafting, but it soon became an information management tool. You could attach properties and metadata to graphical elements, which enabled automated area calculations and rudimentary Bills of Quantities (BOQs).

Takeoff specialists could pull measurements directly from CAD drawings, eliminating the need for physical blueprints. While AutoCAD introduced 3D surface modeling in 1985, practical 3D use cases for QTOs only became viable in the late 1990s with the advent of purpose-built tools like AutoCAD Architecture and, later, Revit.

Enter BIM-based estimation

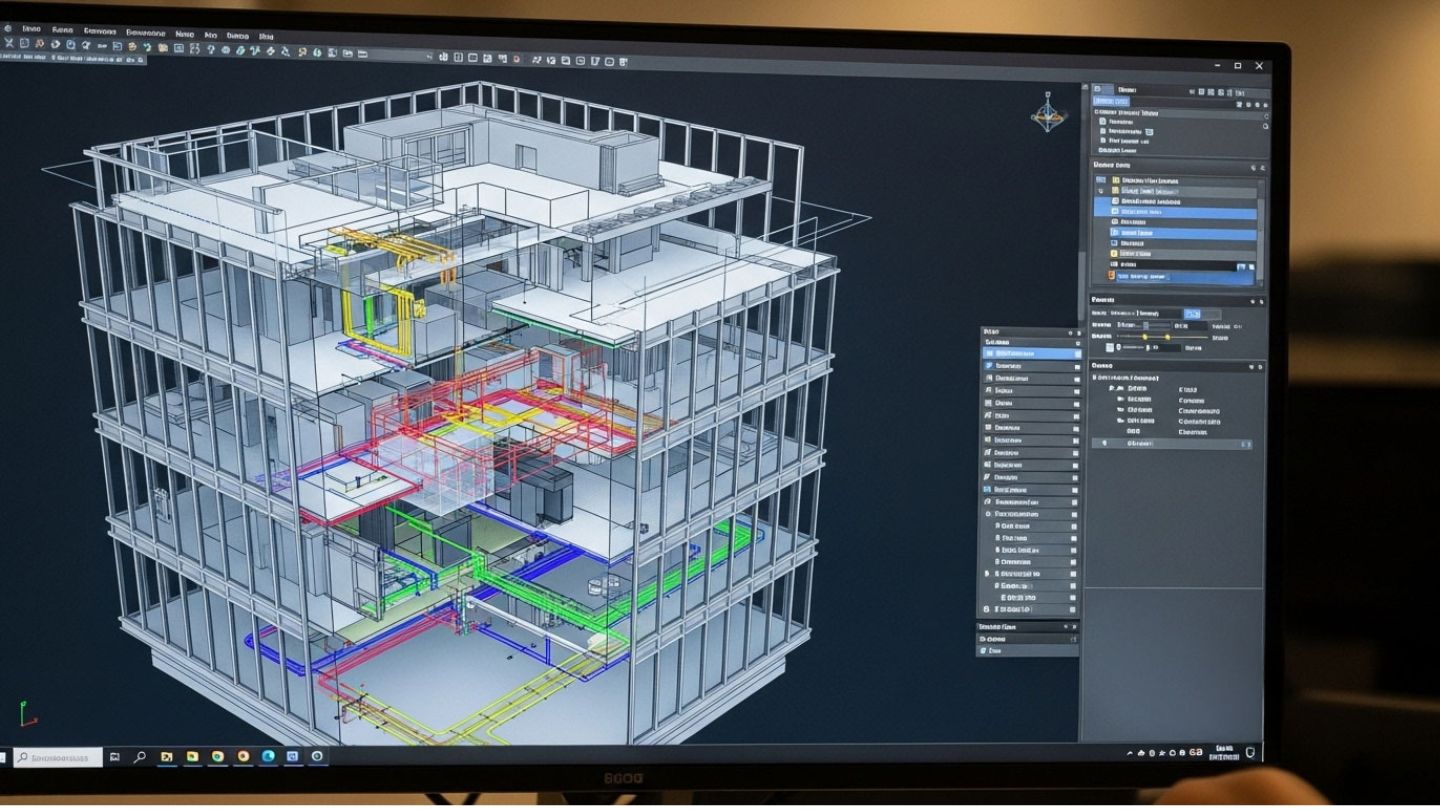

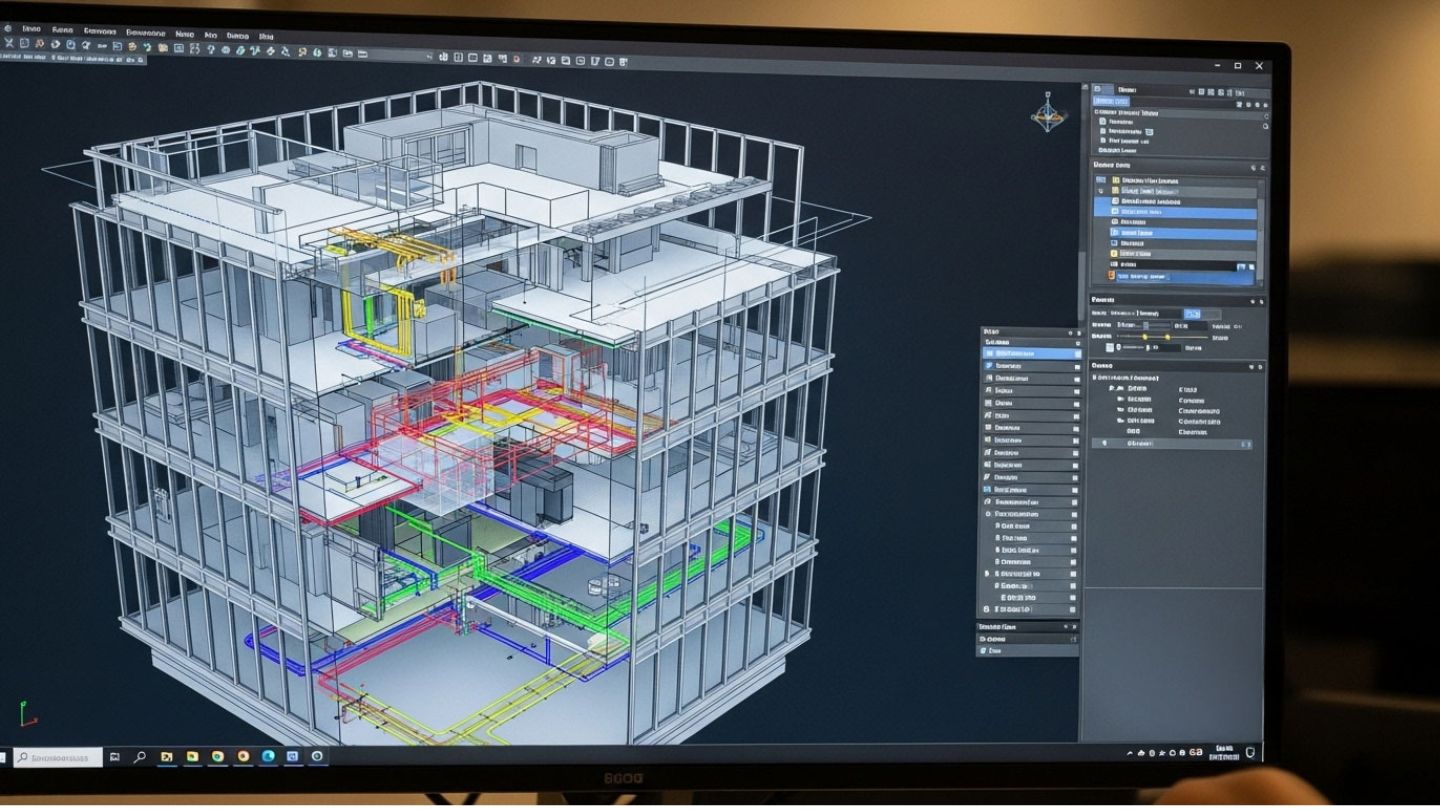

Building Information Modeling marked a turning point. A BIM model contains detailed data about building components along with precise 3D geometry, which enables automatic BOQ generation and structured quantity calculations.
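To make "automatic BOQ generation" concrete, here is a minimal sketch of the aggregation step, assuming the model has already been exported as a flat list of elements with a classification code, a unit, and a quantity. The `Element` record, the `build_boq` function, and the codes are hypothetical and do not come from any BIM tool or classification standard.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class Element:
    element_id: str
    classification: Optional[str]  # made-up code, not a real UniFormat/OmniClass entry
    unit: str
    quantity: float


def build_boq(elements: List[Element]) -> Tuple[Dict[Tuple[str, str], float], List[str]]:
    """Sum quantities per (classification, unit); collect IDs of unclassified elements."""
    totals: Dict[Tuple[str, str], float] = defaultdict(float)
    unclassified: List[str] = []
    for element in elements:
        if not element.classification:
            unclassified.append(element.element_id)
            continue
        totals[(element.classification, element.unit)] += element.quantity
    return dict(totals), unclassified


model = [
    Element("w-01", "EXT-WALL", "m2", 42.5),
    Element("w-02", "EXT-WALL", "m2", 37.5),
    Element("s-01", "FLOOR-SLAB", "m3", 24.0),
    Element("x-99", None, "m2", 12.0),  # modeled, but never classified
]
boq, needs_review = build_boq(model)
for (code, unit), quantity in sorted(boq.items()):
    print(f"{code:<12}{quantity:>8.1f} {unit}")
print("unclassified:", needs_review)
```

The grouping itself is trivial. The result is only as good as the classification attached to each element, so anything without a code ends up on a review list rather than in the bill.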

In the early 2010s, my client Tocoman pioneered BIM-based estimation using ArchiCAD models. Adoption was initially slow, but today many firms use BIM for QTOs with tools like Solibri, Autodesk Takeoff, Cubit, and others.

However, a significant challenge remains: most designers do not model with QTOs in mind. Visually impressive models often lack the object-level detail or classification required for construction-ready quantity extraction. As a result, contractors frequently rebuild models to meet their standards, especially where quantity-linked procurement or production is involved.

It’s also worth noting that interoperability is an ongoing issue; IFC (Industry Foundation Classes) and classification standards like UniFormat or OmniClass help, but aren’t always followed consistently.

The PDF dominance

Despite BIM’s promise, PDFs remain the de facto standard for exchanging drawings. They’re easy to view and annotate without needing CAD or BIM software, making them widely accessible.

Many contractors use QTO software that interprets 2D PDF floor plans for annotations and measurements. The result is a categorized, itemized list of building components and materials. Some tools, like RIB Candy, allow seamless workflows from takeoff to cost estimating and planning within the same environment.

Still, human interpretation is critical. Even the most advanced software can struggle with drawing inconsistencies, overlapping symbols, or scale issues. The estimator’s expertise remains central.

AI-powered tools: Back to 2D!

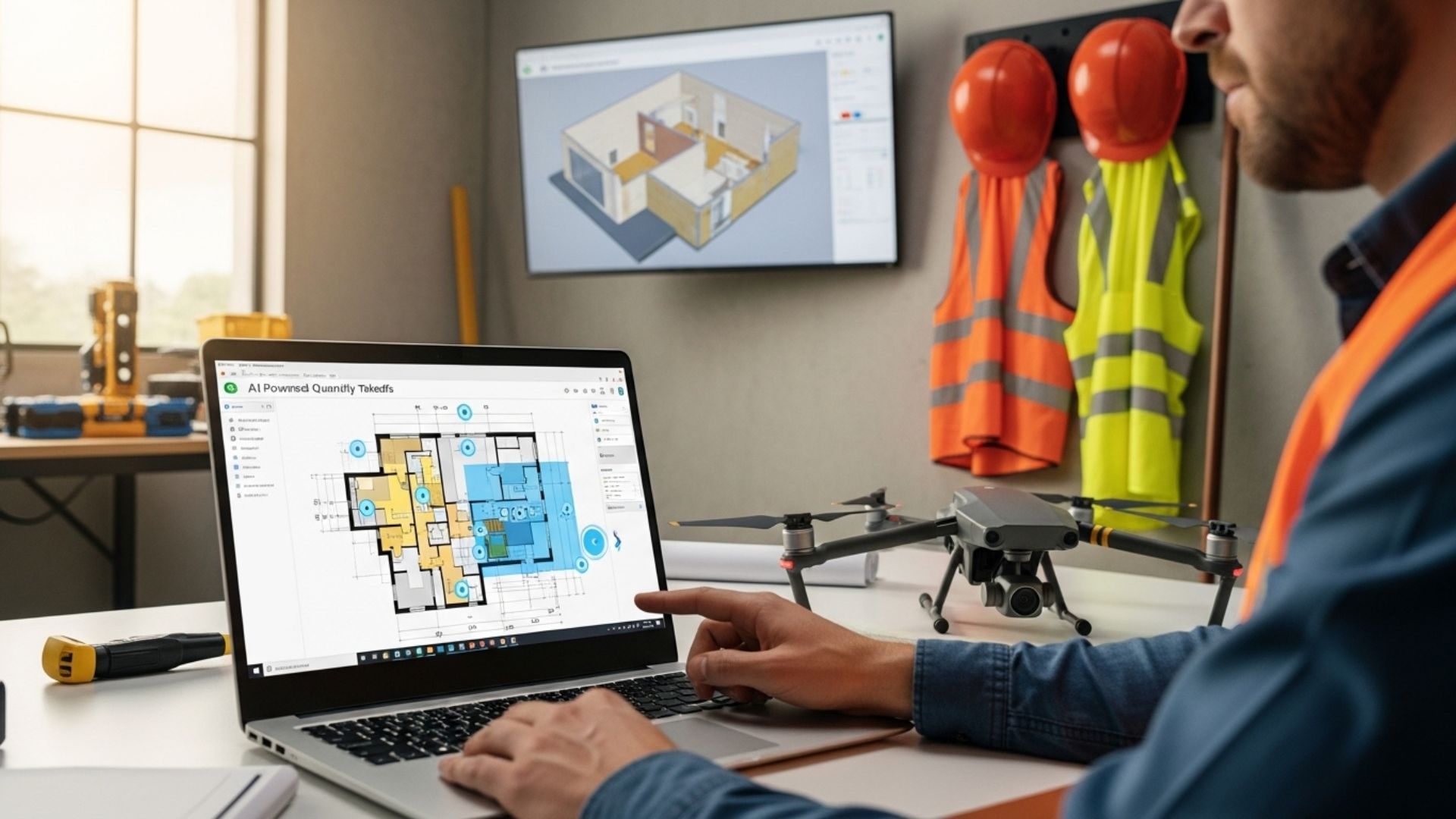

With AI’s rise, it was only a matter of time before QTO and estimation became targets for automation. Today, machine learning tools interpret 2D PDFs to generate BOQs and cost estimates, simulating how an estimator would analyze a drawing.

TaksoAI, for instance, is an AI-powered MEP takeoff solution that automatically detects over 60 pipe and HVAC fittings from 2D drawings.

Paradoxically, these PDFs originate from CAD or BIM files. We’ve effectively stripped away data only to reconstruct it through machine learning. While effective in some cases, this feels like a transitory phase. A properly structured BIM model could deliver far richer and more reliable input for QTO.

Some companies are already bridging the gap. Kreo BIM Takeoff, for example, integrates BIM and AI in a cloud-based platform to extract quantities from 3D models.

LLMs for quick estimates

Large Language Model applications like ChatGPT can generate early-stage estimates from building descriptions. Provide a building type, sizing, and location, and you’ll receive a space program, technical assumptions, regulatory context, and even a rough budget.

While impressive, these outputs aren’t yet sufficient for professional use. Estimators require traceable, vetted data sources and localized cost libraries, something generic LLMs can’t provide. To overcome this, some firms have built custom GPTs or RAG (Retrieval-Augmented Generation) systems using their proprietary estimation data.

LLMs are not replacements for estimation software, but they can support preliminary feasibility studies, client presentations, or serve as intelligent front ends to structured estimation workflows.

Will quantity surveyors become obsolete?

QTOs and cost estimates are, at their core, structured numerical data. That makes them ideal candidates for automation. However, reliable cost estimation goes beyond measurement.

For example, installing ductwork in an office with a 3-meter ceiling is far less complex than doing so in a 12-meter-high hangar. That difference isn’t visible in 2D drawings or models; it requires understanding site conditions, installation logistics, and crew productivity.
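One way an estimator's judgment could enter a calculation is as a productivity factor applied on top of the measured quantity. The sketch below is purely illustrative: the height bands, multipliers, base hours, and hourly rate are placeholder assumptions, not published norms, and the names `DuctRun`, `height_factor`, and `labor_cost` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DuctRun:
    length_m: float
    install_height_m: float


def height_factor(install_height_m: float) -> float:
    """Labor multiplier by working height. Placeholder values, not published norms."""
    if install_height_m <= 3.5:
        return 1.0   # assumed: work from stepladders or low platforms
    if install_height_m <= 6.0:
        return 1.25  # assumed: mobile scaffold needed
    return 1.6       # assumed: lifts and extra material handling needed


def labor_cost(run: DuctRun, base_hours_per_m: float, hourly_rate: float) -> float:
    """Labor cost = measured length x base hours per metre x height factor x rate."""
    hours = run.length_m * base_hours_per_m * height_factor(run.install_height_m)
    return hours * hourly_rate


# Same measured quantity, different site conditions.
office = DuctRun(length_m=100.0, install_height_m=3.0)
hangar = DuctRun(length_m=100.0, install_height_m=12.0)
office_cost = labor_cost(office, base_hours_per_m=0.5, hourly_rate=60.0)
hangar_cost = labor_cost(hangar, base_hours_per_m=0.5, hourly_rate=60.0)
print(f"office: {office_cost:.0f}, hangar: {hangar_cost:.0f}")
```

Both runs have the same takeoff quantity of 100 m, yet the labor cost differs because of a factor that no drawing carries. Deciding what that factor should be is where the estimator's site knowledge comes in.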

Other key factors, such as client requirements, local regulations, labor rates, and supplier pricing volatility, also complicate automation. Yet AI is advancing fast. Given sufficient data, intelligent systems may one day account for these variables.

Still, the final responsibility and liability for cost decisions remain with the human professional. As long as money, contracts, and risk are involved, humans will want to validate estimates. Perhaps a second AI will eventually be deployed to audit the first.

Automating with standardized data

Currently, quantities are measured around a dozen times during a project’s lifecycle, with varying results each time. The goal should not just be to speed up these QTOs but to eliminate most of them through data continuity.

As I’ve written previously, the Finnish construction sector is therefore standardizing the way we present product data over the project life cycle. That will enable reliable AI-powered automation of various functions across the supply chain, including QTOs and estimations.

Fully automated QTO and estimation is not a question of if, but when. But instead of eliminating the role of the estimator, AI may elevate it.

In the future, estimators will likely supervise, verify, and fine-tune AI-generated results, focusing on higher-order decisions rather than manual measurement.
